Adopting AI Agents for Government and the Public Sector

As we all know, AI agents are quickly transforming the way all kinds of organizations operate.

However, many teams still face major blockers to AI adoption. This is particularly evident in the public sector, where a range of security, privacy, and commercial issues make rolling out AI-powered systems particularly difficult.

Today, we’re examining the key issues that are faced by public sector organizations, which can impede AI adoption, along with some of the technical requirements that these issues can be translated into.

Specifically, we’ll be covering:

- What are AI agents?

- Government use cases for agentic AI

- Public sector requirements for AI systems

- Tool stacks for government AI agents

Let’s start with the basics.

What are AI agents?

AI agents are LLM-powered software systems that can independently reason and carry out actions in response to an instruction.

This works by providing an AI model with access to the tools and data it needs to perform relevant tasks, along with guidance on how these are to be used within specific workflows and processes.

As such, agent behavior is generally determined by natural language instructions. This includes the core outline of the task at hand, the inputs the agent can expect, how and when specific tools can be used, and the outputs that are required.

Depending on the specific use case, agents can be deployed in a few different ways. The most obvious is as end-user-facing chat tools. This means that users can interact with agents in real-time via AI chat UIs, in much the same way they’d communicate with colleagues.

However, many agents are also invoked as part of wider automation rules. For example, these may be triggered following a system event, API request, or database operation. This allows us to take advantage of agentic reasoning in a wider range of more controlled scenarios.

Some agentic systems can follow more advanced design patterns. For example, multi-agent systems that comprise several task-specific agents working in tandem, or RAG agents, which are able to retrieve information from files and documents in order to inform their responses.

How is this different for the public sector?

As we hinted at earlier, adopting agentic AI in government organizations presents several unique challenges that teams in other industries don’t necessarily need to deal with.

One huge aspect of this is the fact that public sector workflows often handle highly sensitive information. For instance, relating to pensions, health records, citizenship, or other categories of personal information.

This is a particular challenge in the context of agentic AI, because it creates restrictions on how and when data can be shared with LLMs - especially where these are offered as a cloud-based service.

Similarly, many government organizations have heightened requirements around data residency. This means that they must tightly control how and where their data is stored, including hosting for any services that might process this data.

As we’ll see a little later, this often translates into public sector teams prioritizing agent solutions that enable them to select LLMs that are appropriate for their needs, especially open-source or self-hosted models.

Of course, in many government workflows, there are also important requirements around auditability, human-in-the-loop, access control, and other security issues, especially where agents are able to interact with mission-critical data and systems.

Government use cases for agentic AI

Understanding why AI adoption in the public sector presents unique challenges, it’s also helpful to consider some of the key use cases here.

Of course, as with any other kind of organization, government orgs handle a huge variety of workflows, which it would be impossible to list comprehensively. Instead, it’s more useful to consider the broad categories of these and where AI agents fit into each.

Perhaps the most obvious cluster of use cases here is citizen services. These are end-user-facing use cases, which typically take the form of a request and approval workflow, for example:

- Triaging and routing incoming requests,

- Handling information retrieval and self-service workflows,

- Guiding users through claims, submissions, and eligibility,

- Providing updates on ongoing claims,

- Replicating front-line support interactions.

Another key cluster of use cases here is internal operations workflows. This includes the bulk of administrative tasks that are performed within public sector teams, without necessarily having a user-facing component. For example:

- Parsing and processing documents,

- Assisting with case management and routing,

- Updating records and knowledge systems,

- Handling internal ticketing and support functions,

- Retrieving information from disparate internal systems,

- Drafting summaries and reports,

- Coordinating tasks and workflows across departments and teams.

Lastly, governments are increasingly utilizing agentic AI for data management and monitoring use cases, especially when dealing with large datasets. This includes:

- Anomaly detection,

- Compliance monitoring,

- Assisting with audits,

- Pattern recognition,

- Risk detection,

- Early warning systems for incidents and irregularities.

As we can see, public sector AI agents can potentially handle quite a diverse range of use cases. As such, it’s crucial to be conscious of the requirements of individual use cases, in tandem with the broad issues we outlined in the previous section.

Public sector requirements for AI systems

With a better grasp of the situations where we’re most likely to deploy AI agents within government workflows, we can move on to thinking about the specific decision points we’re going to encounter when evaluating different solutions on the market.

We’ve broken this down into a few key functional areas.

Data residency & hosting

Our first category of requirements is something that we already hinted at earlier. Data residency refers to the extent to which we can control the location and jurisdiction of our data storage.

Often, public sector organizations are required to host their data in specific locations. For example, European governments storing their data on EU servers.

This can be achieved in a few distinct ways, depending on the depth of our requirements.

On the one hand, many SaaS-based platforms offer the option to select a cloud region for hosting. For some teams, this may be a workable option for achieving their data residency requirements without the overhead of managing their own infrastructure.

However, many government use cases will require an even higher level of control over data residency, in the form of self-hosting and on-prem deployments.

While this is also true of most other types of software systems, it introduces some particular challenges in the case of AI agents. Specifically, a truly self-hosted solution will also require us to utilize a language model that we can deploy on-prem.

So, we’ll first need an agent-building toolstack that enables us to connect to on-prem models. We’ll also need to account for the infrastructure costs and effort associated with deploying our own model.

Model selection

Following on from what we’ve just discussed, another key decision factor for public sector AI agents is model selection.

There are a few distinct angles that we’ll need to consider this from. Firstly, as we just saw, many government organizations have a preference for open-source or other self-hostable LLMs.

Where this is the case, it becomes critical to consider the hosting requirements of the model itself. That is, LLMs come in a variety of different sizes, measured by their parameter count. Broadly speaking, larger models will have higher compute requirements and therefore, associated costs.

At the same time, this also impacts the performance of individual models. So, we need to balance the resources we’ll incur when hosting models with the capabilities that we need within our workflows.

This requires careful consideration of our requirements, including assessing the general and task-specific performance of our chosen LLMs.

Integrations

Integrations are another key factor when assessing our options for government AI agents. The crux of this is that, in order to operate effectively within our workflows, agents must be capable of connecting to our wider toolstack in order to retrieve information and trigger actions.

This is known as tool calling. Essentially, we provide the agent with access to tools that it can invoke in response to a request, either via a collection of API endpoints or an MCP server. We’ll also need to provide guidance on how and when each tool can be called.

While this also applies within non-government use cases, there are a few additional factors here that might come into play, relating to the kinds of tools that public sector organizations rely on within their workflows.

One potential issue is that government teams often have a relatively high level of reliance on legacy systems. So, we’ll need to be conscious of this when choosing AI agent tools that are capable of connecting to a wide range of platforms, including via custom API requests or middleware tools.

Additionally, we may encounter heightened security requirements when connecting to third-party tools, especially within more sensitive government workflows, which any agent building tools we’re vetting will need to comply with.

Licensing terms

As with any other type of software system, another important issue here is licensing. Specifically, public sector IT teams are generally constrained by tighter requirements here when assessing software vendors.

This can come in a few distinct forms.

Where software tools are offered on a commercial basis, governments are often required to purchase via a tendering process, where multiple vendors can submit bids to demonstrate how their products meet the project’s requirements.

The goal of this is to ensure that purchased software not only meets the relevant technical requirements, but also complies with legal, financial, and other regulatory regulations. However, tendering can also be a lengthy process.

Related to this, many government IT teams show a preference for open-source software solutions. On the one hand, this is important from a security perspective, as teams can inspect the source code of any platform that will interact with mission-critical data and services.

At the same time, approval processes for open-source software can be less stringent, as the software itself is often free to use.

You might also like our round-up of the top open-source AI agent platforms .

Agent-building experiences

Of course, the actual experiences on offer for building agents within individual platforms are also a critical consideration.

There are a couple of key categories of solutions that we can distinguish between here.

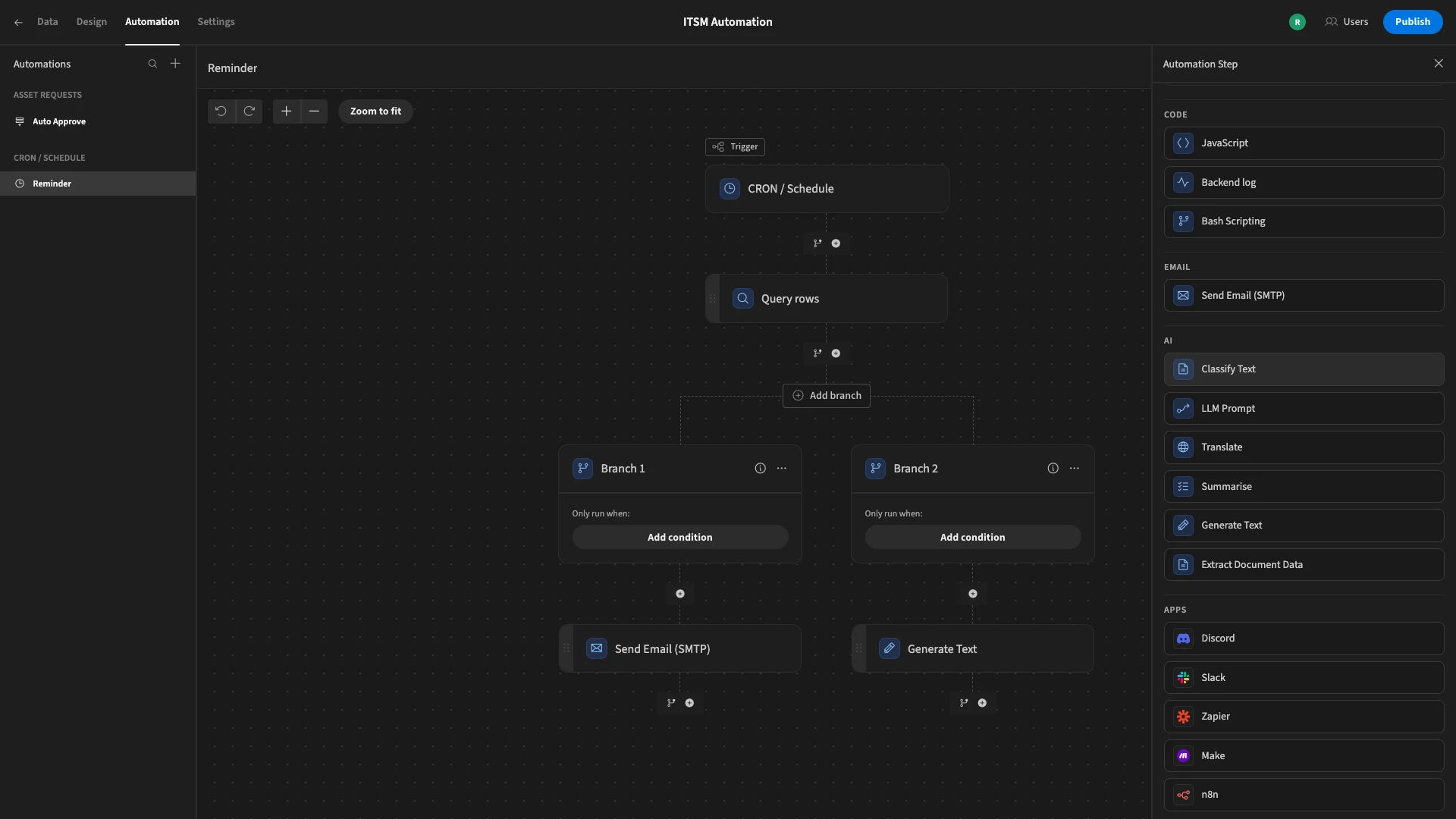

First, many teams deploy agents using what are known as AI agent frameworks. Essentially, these are code-based tools that expedite the agent-building process by abstracting away boilerplate code.

So, these can provide extensive flexibility to build custom agents, but we’ll still require the requisite coding skills to build and maintain solutions.

The other category can be thought of as no/low-code agent builders . These largely rely on visual development and natural language experiences for building agents. As such, they can be more viable options for a range of colleagues to manage, although we might sacrifice some in-depth customization compared to more code-centric tools.

Therefore, our choice of which platform is most appropriate will need to be informed both by both the requirements of our use case and the types of colleagues that we will task with implementation.

Tool stacks for government AI agents

With a solid understanding of the decision points we’re likely to encounter when assessing different solutions, it’s also useful to think about the individual components that make up an effective government AI agent system.

As we’ve hinted at already, perhaps the most fundamental aspect of this is our LLM. This determines a range of important elements of our agents’ success, including their technical performance, capabilities for performing specific tasks, and computing costs.

As we know, many government teams will opt for self-hosted options here. Check out our guide to open-source LLMs to learn more.

With our chosen LLM, we’ll also need capabilities for defining agent behavior and connecting to third-party tools and systems, whether we opt to do this using an agent framework or an agent-builder platform, such as Budibase.

For certain use cases, we may need more advanced capabilities. In particular, if our workflow requires our agent to interact with files and documents via RAG, we’ll need to select an appropriate embedding model and vector database to handle this.

Take a look at our guide to retrieval augmented generation to learn more.

On top of this, we’ll need to consider how agents can be invoked within workflows, along with how end users can interact with them, including triggering agents, providing feedback during workflows, or inspecting outcomes.

This can come in several forms. Most obviously, we might opt to enable interactions with our agents via an AI chat UI. Alternatively, for more tightly structured workflows, we might facilitate user interactions via more traditional UIs, such as forms and admin panels.

For example, where agents are invoked to perform defined processes in response to database events, such as tickets being created.

Additionally, to be viable in government use cases, AI agents will require additional controls, such as human-in-the-loop approval mechanisms for proposed actions, as well as effective logging and observability tools.

The open-source AI workflow toolkit for privacy-first teams

Budibase is the open-source AI workflow toolkit for privacy-first teams. We offer support for all kinds of databases, APIs, and tools, alongside our app and automation-building tools.

IT teams in all industries choose Budibase to handle all kinds of internal workflows, including approvals, ticketing, request management, and more.

Check out our features overview to learn more.