Low code, Vibe code & us

As AI code generators and vibe coding dominated the narrative, low-code platforms looked on as the unloved child, missing the spotlight.

We, and (so I’ve heard) other low/no-code platforms, felt a cooling and squeezing of our market. Customers wanted to “wait and see what happens with AI” before committing to any big contracts.

The most obvious move for any low-code product to make was to add prompt-to-app capabilities - vibe-coding low-code.

Some platforms have added these capabilities, but we have not - a conscious decision. I believe that vibe-coding low-code can be a fantastic onboarding tool, but it will not win in the end. We need to place our chips with conviction - “hell yeah, or no” - and we have chosen to place our bet elsewhere at this stage (I’ll talk about this later).

Is AI code generation a threat to low-code?

Low-code exists because code is hard - or at least time-consuming. When we use low-code to build software, we are making a tradeoff: we give up flexibility in exchange for ease of use. The sliding scale from no-code (easy) to assembly code (hard) is tethered to the sliding scale from rigid & narrow-use to flexible & broad-use.

LLMs have broken this tradeoff in a way that nobody thought possible. Code stayed just as flexible and did not get any easier - but, with LLMs to assist, the developer got smarter. Low-code can never be as flexible as code - the idea fundamentally does not make sense, because the constraints are what make it low-code.

When choosing how to build your app, you already know that it’s possible with code, whereas it takes time to prove that a low-code platform can do the job. If there is any doubt that a low-code platform can handle a complex use case, then code might immediately be the easier option.

And, to pile on the pressure, AI code generators ship a codebase that you can run for zero ongoing licensing costs, versus a pricey ongoing license fee for low-code.

So, why not do prompt-to-app in low-code?

The flexibility of code is what makes vibe-coding particularly powerful - AI enhances the developer’s capabilities without compromising flexibility. The ceiling is much lower for low-code, therefore prompt-to-app just isn’t as useful - although it can be a nice onboarding feature.

It is also likely to be impossible to train an LLM on low-code as accurately as it can be trained on code. There is simply not enough low-code training data available compared to the vast amounts of publicly available code repositories, complete with comments, tests, and documentation. AI is better at Python than at proprietary JSON blobs.

The case for low-code

For less complex use cases, low-code remains favourable - many organisations prefer not to manage and deploy a codebase for simpler use cases. Where the “simple” boundary lies is very much dependent on a person’s experience or access within an organisation.

Deploying any code carries risk. Low-code (unintentionally) brings constraints that often lower that risk: developers can only build what the platform permits - guardrails - whereas with code they can build literally anything.

Low-code platforms also provide a layer of control and governance, allowing you to manage all your databases, automations, apps, and agents in one place, with shared sets of security rules and authentication.

For simpler use cases, low-code will likely be more maintainable going forward. Anyone can quickly and visually understand the logic and make changes. Maintaining code still requires a software engineer, however strong the vibes may be.

The result

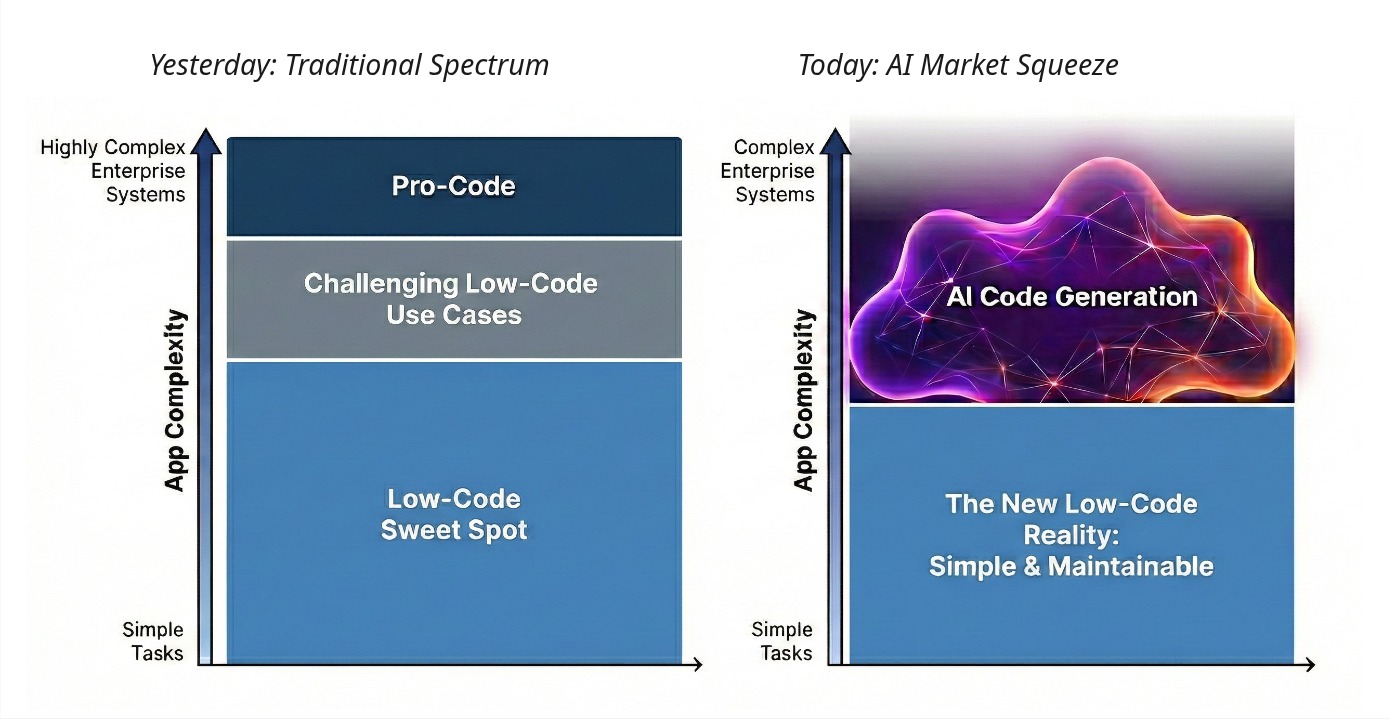

AI is already squeezing out the top-end of complex use cases from low-code, as developers become more productive. Many of these use cases pushed the boundaries of low-code before and arguably should never have been built with low-code at all.

All low-code platforms must now evolve. App-building can no longer be your only offering - it must be one tool in the toolkit. Platforms must diversify their products - apps, and more.

The future of Budibase

Low-code app building might be getting tough, but the “Why?” has never been clearer for us - and it hasn’t changed either. Budibase has always served, and always will serve, the purpose of digitally transforming business workflows.

To be more specific, we allow privacy-first teams to deploy internal workflows with trust and confidence.

Openness and connectivity are in our DNA

- Budibase is open-source.

- Budibase is very friendly to self-managed deployments.

- Budibase connects to a wide range of databases and APIs.

Your data can be kept inside your secure network.

Our vision for Budibase is to become a privacy-first workflow toolkit. Budibase Apps are an important tool in the toolkit. We’ve recently introduced workspaces, which enable data sharing and allow Automations to operate independently. The next tools we’ll add are AI Agents and Chat interfaces, a key interface of the future: AI with humans in the loop.

The future is already here for many of us. However, many public sector organisations and regulated industries worldwide are still unable to access AI chat interfaces, let alone connect their private data to them. We know these organisations want to join the AI era, but they can’t just ‘vibe’ with their private patient data or government records. They need a secure harness.

At Budibase, we aim to bring AI-assisted workflows to privacy-focused teams. We’ll allow you to connect your internal data to LLMs of your choice - including self-hosted / private LLMs. We’ll apply security rules on top of the LLM outputs to ensure that models don’t leak data to insiders who shouldn’t have access.
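To make the idea of access rules around an LLM concrete, here is a minimal sketch in Python. It is not Budibase’s implementation: the `Record` type, the role names, and the `visible_records` and `build_context` helpers are all hypothetical, invented for illustration. It also applies the rule on the input side (only records the user may read are ever placed in the model’s context), which is one simple way to stop an answer from containing data the user shouldn’t see; a real system would likely combine this with checks on the output as well.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """Hypothetical internal record with a list of roles allowed to read it."""
    id: str
    text: str
    allowed_roles: frozenset[str]


def visible_records(records, user_roles):
    """Keep only the records that at least one of the user's roles may read."""
    roles = set(user_roles)
    return [r for r in records if r.allowed_roles & roles]


def build_context(records, user_roles):
    """Build the text handed to the model from permitted records only."""
    permitted = visible_records(records, user_roles)
    return "\n".join(f"[{r.id}] {r.text}" for r in permitted)


# Illustrative data only; roles and contents are made up.
RECORDS = [
    Record("r1", "Clinic opening hours: 9am to 5pm", frozenset({"staff", "clinician"})),
    Record("r2", "Patient 1042 test results", frozenset({"clinician"})),
]

if __name__ == "__main__":
    # A "staff" user's context contains r1 but never r2.
    print(build_context(RECORDS, ["staff"]))
```

The design choice here is that the model never sees what the user cannot see, so there is nothing restricted for it to repeat, whichever LLM sits behind the call.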

I’ll be writing in more detail about the future of Budibase very soon. Come and join us on Discord - I’d love to hear your opinion.