Top 10 Data Transformation Tools

Selecting the right data transformation tools is a critical decision - with far-reaching consequences.

Nowadays, the idea that data is your business’s most valuable asset is a bit of a cliché. The real challenge is getting this data into the hands of the right people, with the right tools, in a usable form.

This is especially tricky now that we’re dealing with increasingly diverse, large-scale data sets.

Today, we’re diving deep into how to choose the right data transformation software, including our top picks from across the market and how to make the right call for your particular needs.

Let’s jump right in.

What are data transformation tools?

Data transformation tools are platforms that prepare data for use. In the most high-level terms possible, this means altering or updating data - bringing it from one state to another.

So, specific examples of transformations include:

- Reformatting.

- Aggregation.

- Cleansing.

- Combining data sets.

- Modeling.

- Restructuring.

- Validation.

- Filtering and creating views.

- Reduction and generalizing.

- Enhancements and handling missing values.

- Altering values.

- And more.
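To make a few of these concrete, here’s a minimal Python sketch that applies cleansing, reformatting, filtering, and aggregation to a handful of order records. The field names (`customer`, `amount`, `date`), the sample data, and the assumption that source dates arrive as DD/MM/YYYY are all hypothetical.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical raw records, as they might arrive from a source system.
raw_orders = [
    {"customer": " Alice ", "amount": "19.99", "date": "03/01/2024"},
    {"customer": "bob", "amount": "5.00", "date": "03/01/2024"},
    {"customer": "Alice", "amount": None, "date": "04/01/2024"},
    {"customer": "BOB", "amount": "12.50", "date": "05/01/2024"},
]

def has_amount(record):
    """Filtering: drop records with a missing amount."""
    return record["amount"] is not None

def cleanse(record):
    """Cleansing: trim whitespace and normalize name casing."""
    return {**record, "customer": record["customer"].strip().title()}

def reformat(record):
    """Reformatting: parse the amount as a number and convert DD/MM/YYYY to ISO 8601."""
    return {
        **record,
        "amount": float(record["amount"]),
        "date": datetime.strptime(record["date"], "%d/%m/%Y").date().isoformat(),
    }

def total_by_customer(records):
    """Aggregation: sum order amounts per customer."""
    totals = defaultdict(float)
    for r in records:
        totals[r["customer"]] += r["amount"]
    return dict(totals)

# Filter first, so reformat() never sees a missing amount.
cleaned = [reformat(cleanse(r)) for r in raw_orders if has_amount(r)]
totals = total_by_customer(cleaned)
print(totals)  # {'Alice': 19.99, 'Bob': 17.5}
```

Each step is a small, separate function, so the same steps can be reordered or reused across pipelines.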

The goal is ultimately to take data from its source and get it into a state that’s appropriate for a target application. For example, when we send information from our data lake to an off-the-shelf analytics platform, we might need to transform it into a supported format.

Why do we need data transformation?

So why is this important? The basic challenge of working with complex, multi-source data sets is that we’ll often have information relating to similar entities or phenomena in different locations and data formats.

For example, to get a full picture of our customers’ behavior, we might need to combine data from our CRM, ecommerce store, marketing platforms, and product analytics.

This creates a big barrier to actually extracting value from our data assets - since we can’t aggregate, combine, or present this data together without first establishing interoperability.
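As a minimal sketch of what establishing interoperability can look like, the Python below merges a CRM export and an ecommerce export into a single customer view. It assumes both systems record the customer’s email address and uses a normalized email as the join key. The field names and data are hypothetical.

```python
# Hypothetical exports from two systems describing the same customers
# with different field names and formats.
crm_contacts = [
    {"Email": "Alice@Example.com", "FullName": "Alice Smith", "Segment": "Enterprise"},
    {"Email": "bob@example.com", "FullName": "Bob Jones", "Segment": "SMB"},
]
store_orders = [
    {"customer_email": "alice@example.com", "order_total": 120.0},
    {"customer_email": "alice@example.com", "order_total": 80.0},
    {"customer_email": "carol@example.com", "order_total": 45.0},
]

def normalize_email(email):
    """Use a trimmed, lowercased email as the shared join key."""
    return email.strip().lower()

def empty_profile():
    return {"name": None, "segment": None, "order_count": 0, "lifetime_value": 0.0}

def build_customer_view(contacts, orders):
    """Combine both sources into one record per customer."""
    view = {}
    for c in contacts:
        entry = empty_profile()
        entry.update(name=c["FullName"], segment=c["Segment"])
        view[normalize_email(c["Email"])] = entry
    for o in orders:
        # Customers seen only in the store get a profile with no CRM details.
        entry = view.setdefault(normalize_email(o["customer_email"]), empty_profile())
        entry["order_count"] += 1
        entry["lifetime_value"] += o["order_total"]
    return view

customers = build_customer_view(crm_contacts, store_orders)
```

Customers who appear in only one source are kept rather than dropped, which makes gaps between systems visible.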

With that in mind, data transformation delivers a few important benefits.

These include:

- Ensuring that data is optimally organized - Making it easier to understand for both humans and computational tools.

- Maximizing data quality - Validation, cleansing, and reformatting help to prevent a range of errors, including those associated with stale data, null values, incorrect indexing, duplication, and corruption.

- Creating compatibility - Allowing our data to be used by a variety of platforms, applications, and processes, without needing to alter its underlying source.

- Improving technical performance - Removing redundant or irrelevant data makes processing faster and makes it easier to garner usable insights.

- Scalability - Effective transformation can remove bottlenecks from our data flows, enabling us to scale our assets and associated processes more easily.
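The data quality benefit above is easiest to see in code. This minimal sketch assumes records with an `id` and an `email` field. The fields, the sample data, and the deliberately simple email pattern are hypothetical; real validation rules would come from your own data model.

```python
import re

# Deliberately simple pattern: something@something.something, no spaces.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def validate(record):
    """Return a list of problems found in one record (empty list means valid)."""
    problems = []
    if record.get("id") is None:
        problems.append("missing id")
    email = record.get("email")
    if email is None:
        problems.append("null email")
    elif not EMAIL_PATTERN.match(email):
        problems.append("malformed email")
    return problems

def clean(records):
    """Split records into valid ones and rejected ones, dropping duplicate ids."""
    seen_ids = set()
    valid, rejected = [], []
    for r in records:
        problems = validate(r)
        if problems:
            rejected.append((r, problems))
        elif r["id"] in seen_ids:
            rejected.append((r, ["duplicate id"]))  # keep the first occurrence only
        else:
            seen_ids.add(r["id"])
            valid.append(r)
    return valid, rejected

records = [
    {"id": 1, "email": "alice@example.com"},
    {"id": 1, "email": "alice@example.com"},
    {"id": 2, "email": None},
    {"id": 3, "email": "not-an-email"},
    {"id": 4, "email": "dan@example.com"},
]
valid, rejected = clean(records)
```

Rejected records are returned with their reasons instead of being silently discarded, so they can be reviewed or fixed at the source.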

These benefits are particularly stark when we take a step back and think about the technical environment that businesses today are operating in. This is characterized by huge numbers of SaaS platforms, distributed data management, and fast-paced technological change.


Therefore, data transformation is a core part of how we integrate these nodes, de-silo data, and maximize organization-wide productivity.

Types of data transformation software

Now, before we jump into our favorite platforms, it’s useful to think briefly about the different use cases, end users, and market segments that specific vendors are targeting.

Under the umbrella of data transformation tools, there’s actually a huge degree of variance in terms of capabilities, functionality, scope, and positioning.

Which is right for you depends on a range of factors, including your budget, the size of the organization, the scope of your data transformation projects, and the technical skills and resources you’re able to dedicate.

The first distinction we can draw is between full-scale data transformation tools, which are used for large-scale transformations and building data pipelines, and solutions that are used to transform data at a more granular, discrete level.

But what are the specific market segments we need to know about?

Most often, data transformation tools fall into one of four categories:

- Enterprise transformation tools - COTS (commercial off-the-shelf) solutions aimed at large organizations that want to implement widespread data transformation capabilities with minimal configuration work.

- Open-source transformation tools - Platforms that allow IT teams to develop their own ETL processes, including modifying the platform’s underlying source code.

- Custom transformation solutions - Transformation solutions that are built from scratch by organizations - often for very specific use cases.

- Transformation capabilities within integration platforms - Data transformation capabilities that are native to other platforms, including integration platforms as a service, API management, or other solutions for transferring and managing data.

So, as you can see, the kind of data transformation tool you’ll need to integrate two SaaS tools is very different from the solution you’ll need to centralize and warehouse all of your organizational data as part of an ETL process.

Top 10 data transformation tools

With that in mind, let’s check out our top picks across each of these four distinct market segments.

Enterprise data transformation

First up, let’s check out some full-fat platforms to extract, transform, and load data.

Remember, these are positioned towards enterprises and other large organizations that need to perform widespread transformations - often as part of their data centralization and warehousing efforts.

Here are our picks.

1. Snowflake

Snowflake positions itself as a unified platform for centralizing, de-siloing, storing, and leveraging data in the cloud. Its core offering is a cloud data warehouse for structured, semi-structured, and unstructured data.

Snowflake’s elastic processing engine offers a secure, performant, and relatively pain-free solution for building and running pipelines, for all kinds of data transformation applications.

Stored data can be queried using standard SQL, and Snowpark adds support for languages such as Python, Java, and Scala. This makes Snowflake a strong fit for managing large, varied data sets in a range of analytical, development, and strategic contexts.

2. Google BigQuery and Dataflow

BigQuery is Google’s data warehousing solution. Dataflow is its stablemate, a dedicated service for large-scale data processing. Together, they are a strong option within Google Cloud.

Part of BigQuery and Dataflow’s popularity comes from their support for SQL and API-based custom querying within pipelines, as well as the overall cost-effectiveness on offer for building cloud-based data solutions.

However, support for third-party data sources is a little spottier than with some competitors, making this a more attractive option for businesses that are already deeply embedded in Google’s ecosystem.

3. AWS Glue

Glue is a serverless ETL tool within Amazon Web Services, with a particular focus on analyzing and cataloging data in tandem with S3 and Athena. Glue’s crawlers can infer the schemas of connected databases and tables.

AWS Glue also supports querying and defining cataloged data with SQL, including both DML and DDL statements, via the Athena query editor.

Overall, Glue is a stable and reliable transformation tool, with a particular focus on empowering developers to build data catalogs. Again, though, you can likely see how Amazon seeks to lock us into its ecosystem by prioritizing integration with other AWS platforms.
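Glue’s crawlers handle schema inference for you, but it can help to see what the idea involves. The following is a deliberately simplified Python sketch, not a description of how Glue works internally: it scans hypothetical JSON-like records and infers a type for each field, using Hive-style type names as an assumption and widening to `string` when observed types conflict.

```python
def infer_type(value):
    """Map a single Python value to a simple column type name."""
    if value is None:
        return None  # nulls say nothing about the column type
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "boolean"
    if isinstance(value, int):
        return "bigint"
    if isinstance(value, float):
        return "double"
    return "string"

def merge_types(current, new):
    """Combine two observed types for the same column."""
    if current is None:
        return new
    if new is None or new == current:
        return current
    if {current, new} == {"bigint", "double"}:
        return "double"  # widen integers to floating point
    return "string"  # any other conflict falls back to string

def infer_schema(records):
    schema = {}
    for record in records:
        for field, value in record.items():
            schema[field] = merge_types(schema.get(field), infer_type(value))
    # Columns that were only ever null default to string.
    return {field: t or "string" for field, t in schema.items()}

sample = [
    {"id": 1, "price": 10, "active": True, "note": None},
    {"id": 2, "price": 12.5, "active": False, "note": None},
    {"id": 3, "price": 8, "active": True, "tag": "sale"},
]
print(infer_schema(sample))
```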

Open-source data transformation

Next, let’s think about some of the open-source data transformation tools that are available. Remember, the idea here is to provide more flexibility and extensibility than their COTS alternatives.

4. Airbyte

Despite being a relatively new player, Airbyte has already won a sizable share of the data transformation tools market. Developers in particular love its huge range of external integrations, pricing transparency, and flexibility for creating data transformation solutions.

Airbyte offers both a cloud-based platform and the option to self-host. There’s also an active community of developers, users, and other data professionals - helping to build, document, and maintain a huge array of data connectors.

One potential downside is that Airbyte is a relatively early-stage solution. Even where some capabilities are possible, they might not be particularly well documented, which can make it harder to get up and running than with some of the alternatives, at least in niche cases.

Check out our round-up of open-source development tools.

5. Apache Kafka

Apache Kafka is a powerful, open-source platform for event-driven data pipelines and transformation flows. It’s built around five core APIs (Admin, Producer, Consumer, Streams, and Connect) for common real-time data transfer and analysis functions.

It’s a clear winner for businesses that need scalable, reliable, and performant data transformation solutions for real-time applications and other mission-critical use cases.

It’s worth noting, however, that Kafka targets a much narrower range of applications than some other data transformation tools, so it will be of more limited use in traditional, relational data management scenarios.
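Kafka Streams is a Java library, so the following isn’t Kafka code. It’s a plain-Python sketch of the consume-transform-produce pattern that event-driven pipelines are built around, with in-memory lists standing in for the input and output topics and hypothetical click events as the data.

```python
import json
from typing import Iterable, Iterator

def consume(topic: Iterable[bytes]) -> Iterator[dict]:
    """Deserialize raw messages one at a time, as a consumer would."""
    for message in topic:
        yield json.loads(message)

def transform(events: Iterable[dict]) -> Iterator[dict]:
    """Filter and enrich each event as it arrives."""
    for event in events:
        if event.get("type") != "purchase":
            continue  # drop events this downstream system doesn't need
        yield {**event, "amount_cents": round(event["amount"] * 100)}

def produce(events: Iterable[dict], sink: list) -> None:
    """Serialize transformed events and write them to the output topic."""
    for event in events:
        sink.append(json.dumps(event).encode("utf-8"))

# Hypothetical input topic contents.
input_topic = [
    json.dumps({"type": "page_view", "user": "u1"}).encode("utf-8"),
    json.dumps({"type": "purchase", "user": "u2", "amount": 19.99}).encode("utf-8"),
]
output_topic: list = []
produce(transform(consume(input_topic)), output_topic)
```

Because each stage is a generator, events flow through the pipeline one at a time rather than being loaded as a batch, which mirrors how a streaming transformation processes an unbounded topic.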

Tools for custom transformation solutions

So far, we’ve mainly seen platforms that are used for creating large-scale, high-volume data transformation pipelines. But this isn’t the whole picture.

Far from it.

It’s just as important to have the tools you need to build more individualized, granular, or context-specific transformation processes.

In these cases, we need the right tools to build custom solutions to transform our data as we pass it between tools.

Let’s check out our hard-coded options first.

6. Python

Python is one of the most popular programming languages among data professionals around the world. This is partly because of how easy it is to work with lists and dictionaries, both of which are important when building ETL processes from scratch.

It also offers a huge amount of versatility, extensive native data structures, and a relatively forgiving syntax.

However, it’s worth noting that Python isn’t the only show in town. Plenty of developers build ETL tools using other languages, including JavaScript.

So, while Python is the most ubiquitous language for data transformation use cases, you’ll still want to make a decision based on your own experience and needs.
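To illustrate the point about lists and dictionaries, here’s a minimal extract-transform-load sketch using only Python’s standard library. The CSV content is hypothetical and held in a string, and an in-memory SQLite database stands in for whatever target you would actually load into.

```python
import csv
import io
import sqlite3

# Extract: read a hypothetical CSV export into a list of dictionaries.
RAW_CSV = """sku,name,price_usd
A-1, Widget ,4.50
B-2,Gadget,
C-3,Gizmo,12.00
"""
rows = list(csv.DictReader(io.StringIO(RAW_CSV)))

# Transform: trim names, convert prices to integer cents, skip rows with no price.
products = [
    {
        "sku": r["sku"],
        "name": r["name"].strip(),
        "price_cents": round(float(r["price_usd"]) * 100),
    }
    for r in rows
    if r["price_usd"]
]

# Load: write the transformed rows into a database table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, name TEXT, price_cents INTEGER)")
conn.executemany(
    "INSERT INTO products (sku, name, price_cents) VALUES (:sku, :name, :price_cents)",
    products,
)
conn.commit()
```

If you load into Postgres instead, a driver such as psycopg would replace `sqlite3`, and its named placeholders use the `%(name)s` style rather than `:name`.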

7. Postgres

Of course, if we’re building our data transformation solution from scratch, we’ll still need to account for data storage and management.

Postgres is an open-source, enterprise-grade database management system that offers full support for SQL and JSON-based querying.

It’s one of the most widely used DBMSs on the market, acting as the basis for countless big data, analytics, web, mobile, and other applications. This makes it a natural choice for storing information as part of custom data transformation projects.

Take a look at our guide to choosing the best database management software.

Transformation within integration platforms and other tools

But what if we don’t want to hard-code our transformation solutions? After all, plenty of non-developers need to integrate tools, so it follows that they’ll also need to be able to transform data.

So, let’s check out some of the platforms that can empower less technical colleagues to transform data as part of their integration efforts.

8. Zapier

Zapier is probably the most popular, well-known integration and workflow automation tool on the market. It’s not a dedicated data transformation platform as such, but it is a viable way for less technical colleagues to build limited flows between other applications and data sources.

Basically, any SaaS platform, data source, or other node that supports webhooks can be connected as part of a Zapier automation flow and combined with native transformation logic, before being sent on to another external system.

Obviously, there are limits to what’s achievable here, and Zapier won’t be a viable solution for more complex transformation tasks. But, there are still plenty of simpler use cases where it will be more than sufficient.

9. Postman

Postman isn’t quite a traditional data transformation platform either - it’s an API management tool. But - it’s an API management tool with comparatively sophisticated data transformation functionality.

In fact, using Postbot and Postman’s native Flows Query Language, it’s possible to perform all kinds of manipulation, restructuring, conditional selection, parsing, and other complex actions on data as it’s passed between platforms via API requests.

This makes Postman the ideal solution for implementing transformations during API-based data transfer, minimizing the need for additional middleware.
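Postman’s Flows Query Language has its own syntax, which we won’t reproduce here. To show the kind of parsing, restructuring, and conditional selection involved, here’s the same idea sketched in Python instead: a hypothetical JSON response from one API is reshaped into the payload another API might expect. Both shapes are invented for illustration.

```python
import json

# Hypothetical response body from a source API.
response_body = """
{
  "data": {
    "users": [
      {"id": 1, "profile": {"first": "Ada", "last": "Lovelace"}, "status": "active"},
      {"id": 2, "profile": {"first": "Alan", "last": "Turing"}, "status": "suspended"},
      {"id": 3, "profile": {"first": "Grace", "last": "Hopper"}, "status": "active"}
    ]
  }
}
"""

def to_target_payload(body: str) -> dict:
    """Parse the response, keep active users, and flatten nested fields."""
    users = json.loads(body)["data"]["users"]  # parsing
    return {
        "contacts": [
            {
                "external_id": str(u["id"]),  # restructuring: rename and retype
                "name": f'{u["profile"]["first"]} {u["profile"]["last"]}',  # flattening
            }
            for u in users
            if u["status"] == "active"  # conditional selection
        ]
    }

payload = to_target_payload(response_body)
```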

Check out our guide to REST API authentication.

10. Budibase

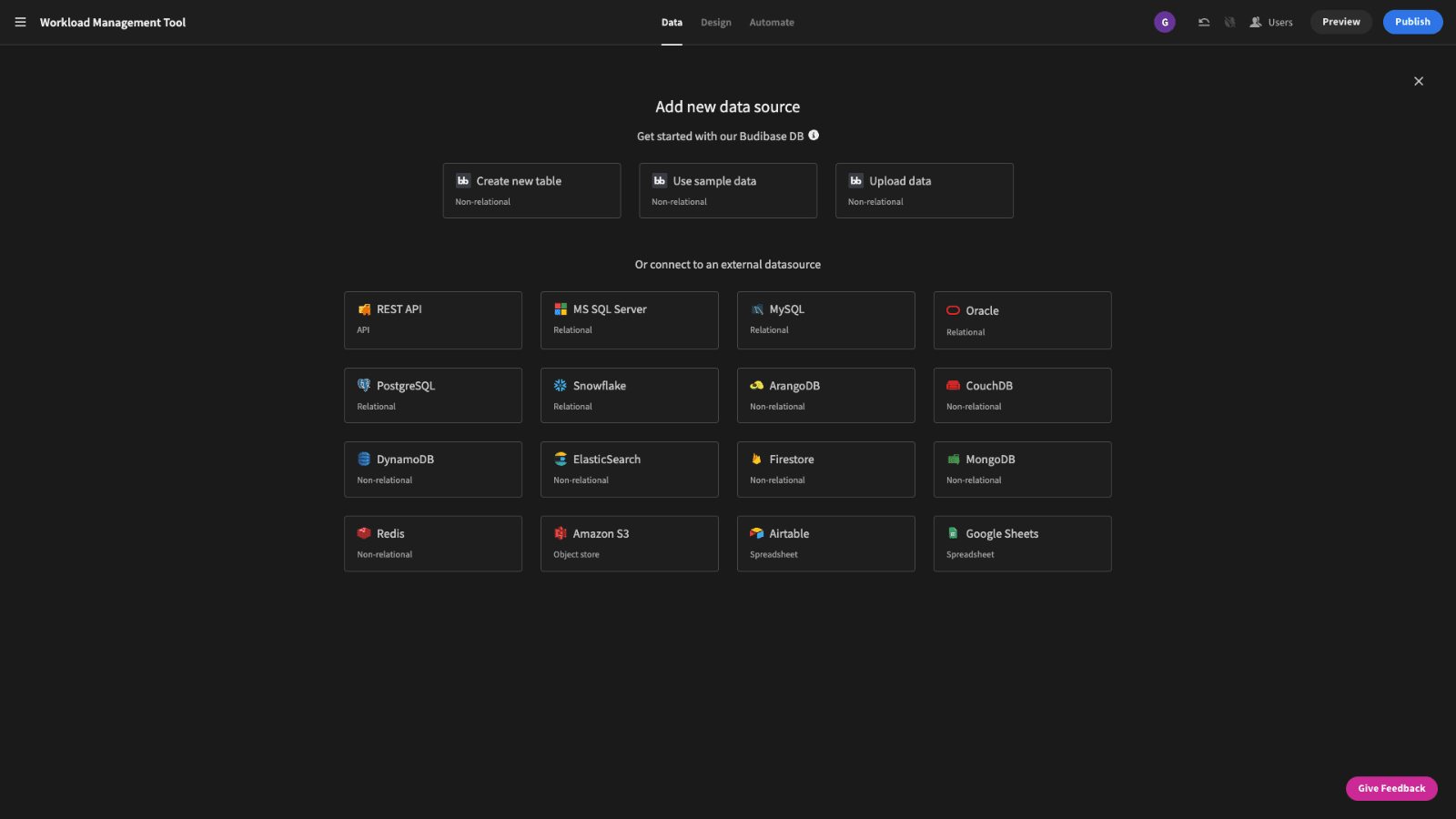

Finally, we have Budibase. Our open-source, low-code platform is the ideal solution for creating all manner of custom applications - including extensive data transformation, manipulation, aggregation, and integration capabilities.

We offer dedicated data connectors for Postgres, SQL, S3, Airtable, Google Sheets, CouchDB, MongoDB, REST API, and many more, with custom queries, JavaScript-based transformers, and autogenerated UIs for manually managing data.

Budibase’s built-in automation editor makes it easy to craft complex transformations leveraging all kinds of connected data sources, without writing a single line of code. Take a look at our features overview to learn more.

Supporting data transformation efforts

Now, we’ve seen a broad spectrum of tools that fill different gaps across the data transformation tools market. But - choosing the right tools is only part of the battle.

So, what other considerations are there when transforming data - and how can we put these into practice to maximize our ROI?

Here’s a selection of other strategies to help data teams drive efficiency and insight across the organization.

Transformation data modeling

First, it’s important to consider how we plan and strategize around data transformation. The most important step here is creating a data model. That is, a detailed plan of all the attributes we store, how they interact and relate to each other, and what they’re used for.

This is vital at all stages of our data transformation initiatives.

So, in the first instance, our task is to outline all of the data assets that we need to account for in our project - whether this is at the scale of creating an enterprise data model - or a more granular, process-specific model.

Then, we can use this information to determine the exact transformations we’re going to require to integrate these different data sources or achieve interoperability.
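Once the data model exists, the required transformations can often be written down as a mapping from each source’s fields onto the model’s attributes. Here’s a minimal Python sketch of that idea. The `Customer` model, the two source systems (`crm` and `billing`), and their field names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

@dataclass
class Customer:
    """Target entity from a hypothetical data model."""
    customer_id: str
    email: str
    country_code: str

# For each source: model attribute -> (source field, conversion function).
FieldMap = Dict[str, Tuple[str, Callable[[object], str]]]

MAPPINGS: Dict[str, FieldMap] = {
    "crm": {
        "customer_id": ("ContactId", str),
        "email": ("EmailAddress", lambda v: str(v).strip().lower()),
        "country_code": ("Country", lambda v: str(v).upper()),
    },
    "billing": {
        "customer_id": ("acct_no", lambda v: f"B-{v}"),  # prefix to avoid id clashes
        "email": ("billing_email", lambda v: str(v).strip().lower()),
        "country_code": ("iso_country", str),
    },
}

def to_customer(source: str, record: dict) -> Customer:
    """Apply one source's mapping to produce a record that conforms to the model."""
    mapping = MAPPINGS[source]
    values = {attr: convert(record[field]) for attr, (field, convert) in mapping.items()}
    return Customer(**values)

crm_customer = to_customer("crm", {"ContactId": 42, "EmailAddress": " Ana@Example.com", "Country": "pt"})
billing_customer = to_customer("billing", {"acct_no": 7, "billing_email": "ana@example.com", "iso_country": "PT"})
```

Keeping the mappings as data rather than scattered logic makes it easy to see, source by source, exactly how each system differs from the model.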

Security, storage, and hosting

We’ll also want to account for how we store, host, and access data - both as stored in its raw form - and post-transformation.

For example, many of the data transformation tools we saw earlier are cloud-based - meaning that data is stored on remote infrastructure by the vendor - either on a shared or private basis, depending on your pricing tier.

Others offer the option of self-hosting - allowing you to deploy data assets to your own infrastructure.

It’s also important to consider which methods you’ll use to authenticate users and clients seeking access to your data assets, as well as authorization, access control, and any other strategies you’ll use to govern exposure to your data.

Outcome-focused data transformation

One of the biggest stumbling blocks for data teams is neglecting the real-world impact of their efforts - focusing instead on technical or abstract issues.

The key here is remaining cognizant of how data is used in workflows, how it can inform decision-making on the ground, and the specific interactions that are most important to users at all levels of the organization.

To learn more about how we can turn data into action, check out our in-depth guide to digital transformation platforms.