10 Self-Hosted AI Tools

AI is becoming central to all kinds of business processes. This offers almost unprecedented potential for efficiency and improvements within workflows.

At the same time, though, businesses are constrained by several considerations that can affect their choice of solutions.

For large organizations, one of the most prominent examples of this is self-hosting.

That is, many enterprises and other security-focused organizations place a high level of emphasis on solutions that can be hosted on their own infrastructure, especially where these relate to mission-critical data or processes.

This is particularly important in the context of AI tools, given their centrality to a range of business processes, as well as comparatively high requirements for connecting to data.

Today, we’re covering everything you need to know, including:

Let’s jump right in.

What is a self-hosted AI tool?

This is something of a broad term. A self-hosted AI tool could be any LLM-powered system or component of our wider AI tool stack that we deploy and host on our own infrastructure, as opposed to accessing it as a cloud-based service.

In other words, this could include elements we use to build AI systems from scratch, such as LLMs or vector databases, as well as AI-powered end-user solutions or visual development tools such as no/low-code AI app builders.

While the drive to self-host exists across a huge range of solutions, it’s important to note that it can present a few key challenges in the context of AI.

In large part, this can come down to the comparatively high computational requirements of AI systems, compared to other types of software. For example, running an LLM locally is often highly resource-intensive, especially for models with larger parameter counts.

So, in many instances, businesses may opt for configurations where some elements are self-hosted, but other capabilities still rely on calling out to a cloud service.

For example, we might use a self-hosted tool to build automation rules or configure the behavior of an AI system, but still utilize external services to actually connect to an LLM.

Alternatively, in some highly-sensitive use cases, we might need the entirety of the system to be hosted on-prem, including our LLM. For this reason, we’ll see a couple of options for building these kinds of solutions a little later when we come to look at specific self-hosted AI tools.

Why would we want to run AI tools locally?

First, though, it’s worth considering why self-hosting is such a priority for many businesses, especially in the context of AI.

In other words, what benefits do self-hostable solutions offer us over their wholly cloud-based equivalents?

In one way, this comes down to control.

More specifically, self-hosting provides us with complete control over how our tools are deployed, including any security or auditing measures we may wish to put in place.

This is particularly important in an enterprise context, where large amounts of the data and other resources that AI tools might need to interact with are already often self-hosted for security reasons.

Besides this, self-hosting can be more cost-effective in many situations, especially where maintaining and managing our own infrastructure works out cheaper than the recurring costs of licensing the equivalent cloud services.

Lastly, many teams prioritize self-hosting to remove their dependency on external services. For instance, enabling them to avoid vendor lock-in or other negative impacts of becoming overly reliant on external systems for mission-critical processes.

10 self-hosted AI tools

With a better understanding of what self-hosted AI tools are and why we might opt for them, we can begin to examine some of the specific options that are available to us.

Earlier, we hinted at some of the more granular types of self-hosted AI tools we might want to adopt. Following on from this, we’ve divided our picks by specific kinds of tools. Specifically, we’ve chosen the following clusters of tools:

LLMs

First up, we can consider our options for self-hosted LLMs . These will be at the forefront of many readers’ minds when it comes to self-hosting AI tools, but LLMs also present some unique challenges in this regard.

This is because LLMs are the aspect of AI systems that typically accounts for the largest computational footprint.

So, we’re constrained not only by which model is best suited to our specific needs and use case, but also in terms of the resources we have available to actually run an LLM.

We’ve chosen two families of models, each offering a range of models with different model counts, making them self-hostable in a range of different contexts.

Gemma 3

First up, we have Google’s Gemma 3 family of models. This currently comprises models with parameter counts ranging from 1 to 27 billion, making it a good fit for a range of use cases and environments.

Gemma models are also built on top of the same technology as Google’s better-known Gemini offering. As such, it performs effectively on a range of tasks, including question-answering, text generation, and instruction-following.

For offline use on edge devices such as smartphones, tablets, and laptops, Google has also recently launched Gemma 3n, built on top of the same architecture as Gemini 3 Nano.

Mistral

Mistral offers several powerful models that can be run on-prem. The recommended installation method is vLLM, a Python-based framework that exposes an OpenAI-compatible API for models.

One of Mistral’s most prominent models is Medium 3, a model aimed at enterprise usage, including support for on-prem or hybrid hosting, as well as integration into enterprise tooling, with very strong performance for its running costs.

Other models in Mistral’s offering include Large 2, a higher-parameter model, highly suited to function calling and code generation, along with Mistral Small 3.1, a highly flexible smaller model, suitable for both enterprise and consumer applications.

Frameworks

Having seen a few of the key options for self-hosted AI models, we can move on to our next class of tools, frameworks.

These are libraries of reusable elements and components that enable developers to more quickly and easily build custom AI-powered applications.

This includes tools that we can run locally, whether we’re calling out to an external or self-hosted model.

LangChain

LangChain is perhaps the most ubiquitous AI framework. This is based around a standardized interface for connecting to all kinds of models, tools, and databases, enabling us to build effective AI-based tools in a composable, adaptable fashion.

Available as both a Python and JavaScript/TypeScript framework, LangChain offers over 600 integrations, making it the ideal solution for implementing AI functionality within a wide range of development projects.

In particular, LangChain is a popular option for connecting AI models to data sources. This includes real-time data augmentation, enabling us to connect LLMs to a wide range of databases, APIs, and other sources within workflows.

AutoGen

AutoGen is a set of frameworks and other AI tools from Microsoft, suitable for experimentation, prototyping, or building production AI agents, including tools for building solutions using Python or visual development.

For production systems, we’ll need to use the AutoGen Core framework. This is an event-driven framework for building multi-agent systems, including handling deterministic or dynamic workflows.

AutoGen is also a highly extensible framework, with tools for connecting to a wide range of models, MCP servers, and more.

AI platforms, automation tools, and app builders

Next, we have a somewhat different class of self-hostable AI tools. Broadly speaking, these are tools that enable us to build AI solutions without necessarily requiring extensive technical abilities or coding skills, usually relying instead on low-code experiences.

This can take a couple of different forms. For instance, building automation rules, entire applications, or AI agents.

So, we’ve chosen platforms from across this segment. You might also like our ultimate guide to AI forms .

Budibase

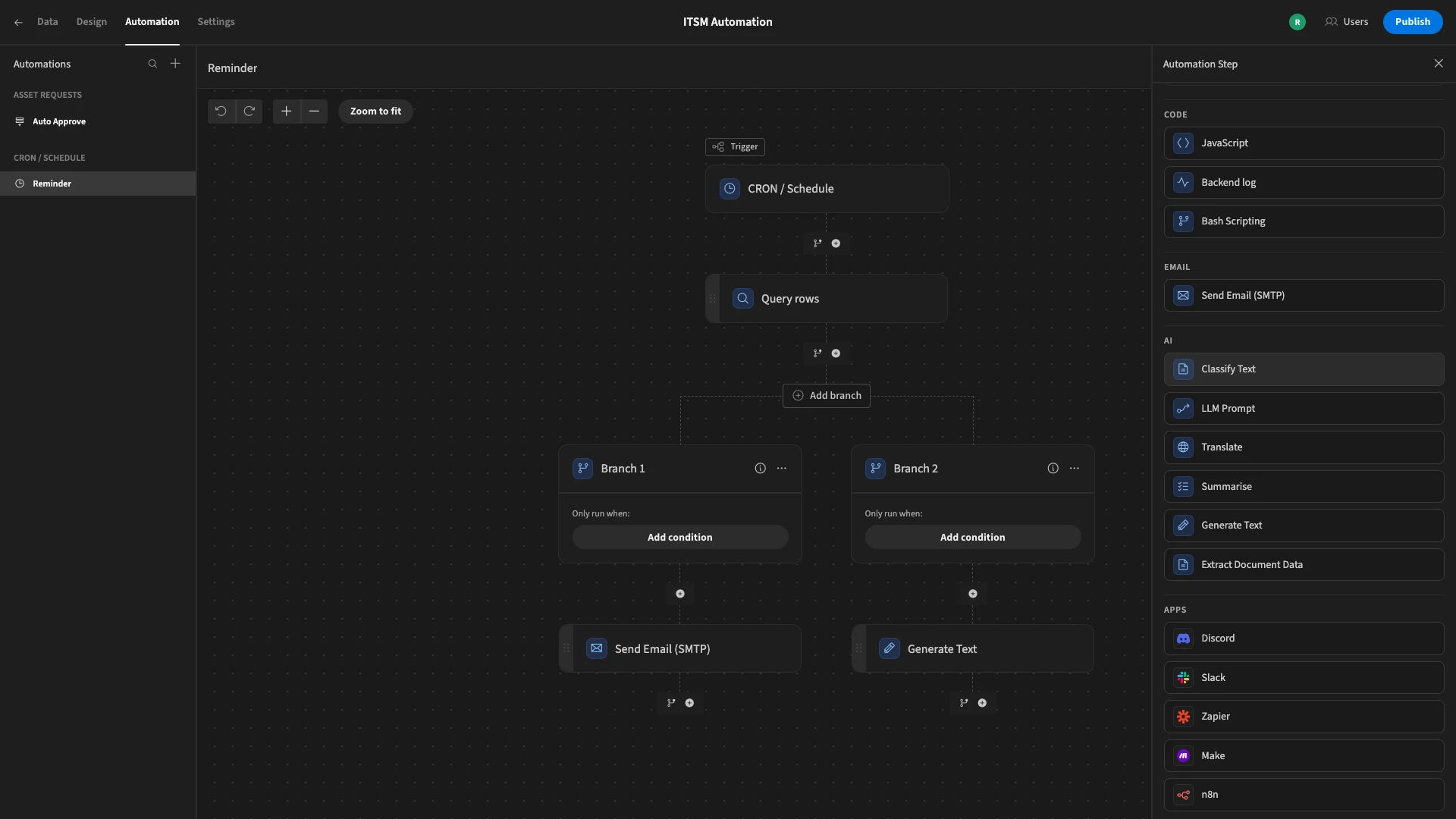

Budibase is the open-source, low-code platform that empowers IT teams to turn data into action. Security-conscious organizations choose our platform to build all kinds of AI-powered internal tools.

With extensive connectivity for RDBMSs, APIs, NoSQL tools, and more, alongside autogenerated UIs, powerful visual automations, comprehensive AI capabilities, custom RBAC, free SSO, and more, there’s never been a better way to build professional apps at pace.

Budibase is used by teams of all sizes to handle workflows including approvals, ticketing, portals, inventory management, and more.

Check out our features overview to learn more.

n8n

n8n is a hugely popular open-source automation builder, offering a visual experience for configuring workflows across a huge range of integrated tools. It’s particularly popular with more technical teams, offering extensive flexibility and scope for custom code.

In recent years, n8n has also positioned itself as a powerful solution for building AI agents, using visual development tools, including several pre-built templates and nodes for common real-world use cases.

In addition, n8n offers a wealth of testing and debugging capabilities, making it a powerful option for developers who want to build production AI tools.

You might also like our round-up of the top open-source AI agent platforms .

CrewAI

CrewAI is one of the leading platforms for transforming workflows with multi-agent systems. Within this, it offers a range of tools, including a coding framework and a low-code platform in the form of CrewAI Studio.

This offers multi-platform support, including self-hosting through Docker or Conda, alongside extensive connectivity for LLMs, data, and tools, all in a low-code interface.

On top of this, CrewAI offers extensive capabilities for tracking, monitoring, and iterating over solutions, making it a great choice for deploying multi-agent systems in a wide range of contexts.

Observability and management tools

Lastly, it’s important to consider additional tools that we might need to help us manage our AI systems in production.

In particular, we have several options for monitoring AI tools’ behavior and performance in real-world environments.

Here are two of our picks.

LangSmith

From the makers of LangChain, LangSmith is a unified observability and evals platform for AI systems. There’s close support for tools built in LangChain, LangGraph, or other tools and platforms.

It offers a powerful suite of observability and tracing tools, enabling us to understand AI behavior in non-deterministic workflows, in turn helping to improve the effectiveness of our solutions.

On top of this, LangSmith offers the Playground and Prompt Canvas UI, sets of tools for experimenting with prompts and LLMs. There’s also extensive functionality for creating dashboards, helping us to visualize performance.

Langfuse

Lastly, we have Langfuse. This is an open-source, wide-ranging LLM engineering platform with functionality for tracing, evals, prompt management, and monitoring. This positions it as a powerful solution for testing, debugging, and improving AI systems.

It’s a particularly impressive offering for prompt management, including composability and version control. On top of this, there’s a suite of tools for deriving datasets from production data for fine-tuning and testing.

Langfuse is also highly integratable, offering SDKs for Python and TypeScript, as well as native integrations for a wide range of libraries and support for OpenTelemetry.

Turn data into action with Budibase

Budibase is the open-source, low-code platform that empowers IT teams to turn data into action.

With connectivity for a huge range of RDBMSs, APIs, and NoSQL tools, alongside visual automations, autogenerated UIs, extensive AI capabilities, custom RBAC, free SSO, and optional self-hosting, Budibase is the perfect solution for building professional internal tools.